Text-to-SQL

A survey on deep learning approaches for Text-to-SQL

Motivation

To bridge the gap between users and data, numerous text-to-SQL systems have been developed that allow users to pose natural language questions over relational databases. Recently, novel text-to-SQL systems are adopting deep learning methods with very promising results. At the same time, several challenges remain open making this area an active and flourishing field of research and development. To make real progress in building text-to-SQL systems, we need to de-mystify what has been done, understand how and when each approach can be used, and, finally, identify the research challenges ahead of us. The purpose of this survey is to present a detailed taxonomy of neural text-to-SQL systems that will enable a deeper study of all the parts of such a system. This taxonomy will allow us to make a better comparison between different approaches, as well as highlight specific challenges in each step of the process, thus enabling researchers to better strategize their quest towards the ``holy grail” of database accessibility.

Taxonomy

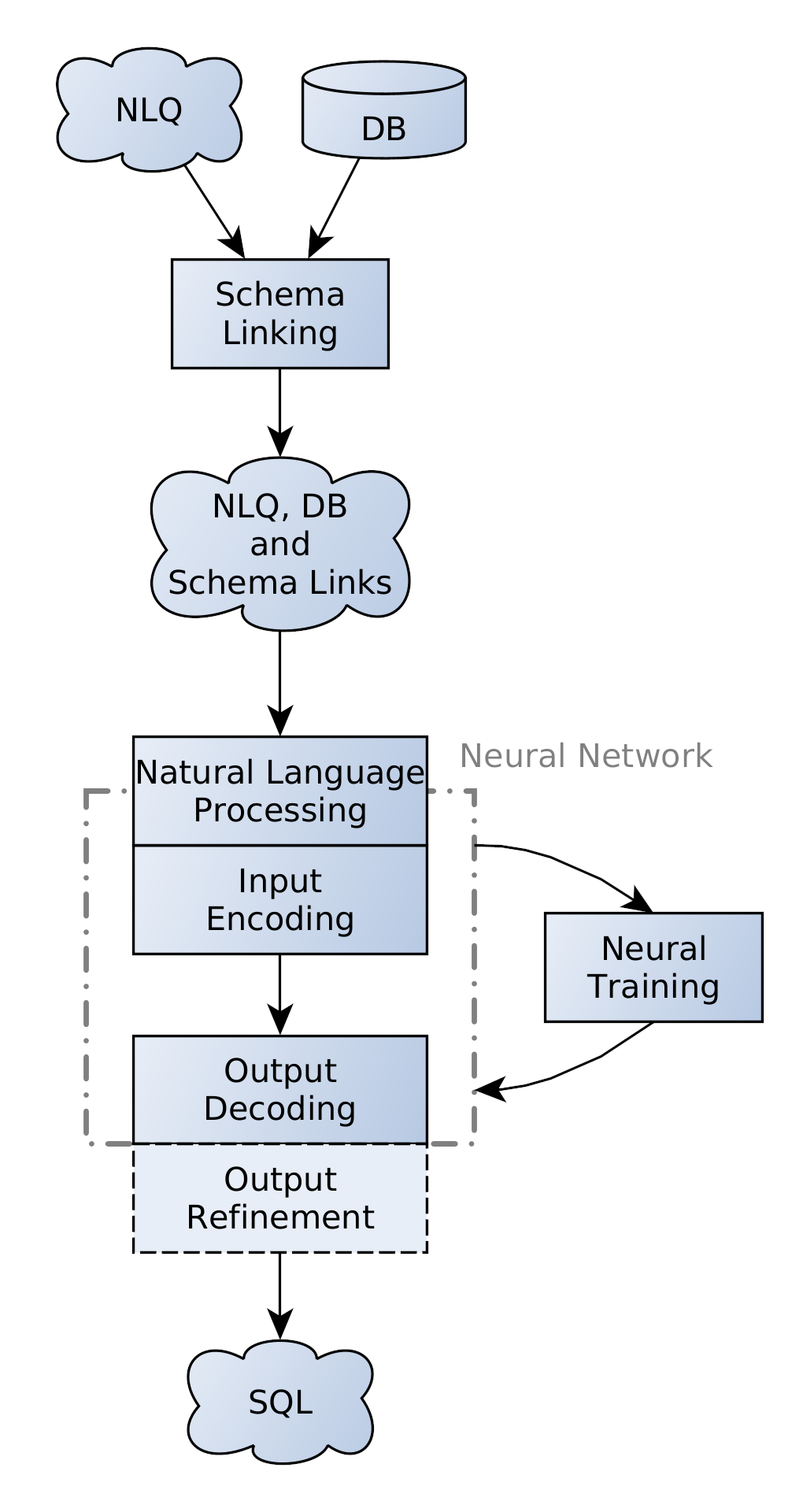

Despite the fact that deep learning approaches have only recently became popular for the text-to-SQL problem, numerous systems have already been proposed, that bring a wide variety of novelties and employ different approaches. Nevertheless, there are key parts that serve common purposes across almost all systems, which allow us to build a general model that can help us better understand them. The main input of a text-to-SQL system is a NL query (NLQ) and the database (DB) that the NLQ is posed on. The first step (whenever employed) is Schema Linking that aims at the discovery of possible mentions of database elements (tables, attributes and values) in the NLQ. These discovered schema links, along with the rest of the inputs, will be fed into the neural network that is responsible for the translation.

The core of this neural network consists mainly of two parts: the encoder and the decoder. The encoder takes one or more inputs of variable shape and transforms them into one or more internal representations with fixed shapes that are consumed by the decoder. The decoder reads the representations calculated by the encoder and makes predictions on the most probable SQL query (or parts of it). Additionally, given that the inputs (NLQ, DB, schema links) are mainly textual, the system must use a Natural Language Representation technique to create an efficient numerical representation that can be read by the encoder. Finally, the neural training dimension refers to the procedure followed for training the neural network.

The last dimension of the taxonomy is the Output Refinement, which can be applied during the decoding phase in order to reduce the possibility of errors and to achieve better results. Note that even though Output Refinement is closely related to Output Decoding and even interacts with the decoder, it is not a part of the neural network. As such, in most cases, it is possible to add or remove an output refinement technique once the system has been created and trained.

Schema Linking

Schema linking is the process of discovering which parts of the NLQ refer to which database elements.

The NLQ parts that could possibly refer to a database element are called query candidates, while the database elements that could occur in the NLQ are called database candidates.

Query candidates can be words or phrases, while database candidates can be tables, columns, and values in the database.

A connection between a query candidate and a database candidate is called a schema link, which can be further categorized as a table link or column link, when the query candidate maps to a table name or column name, respectively, and value link, when it matches a value of a column.

-

Query Candidate Discovery: How to choose query candidates

- Single Tokens

- Multi-word Candidates

- Named Entities

- Additional Candidates

-

Database Candidate Discovery: How to choose DB candidates

- Table and Column Names

- Values via Lookup

- Values via Knowledge Graphs

-

Candidate Matching: How to decide if there is a match between chosen candidates

- Exact and Partial Matching

- Fuzzy/Approximate String Matching

- Learned Embeddings

- Classifiers

- Neural Attention

Natural Language Processing

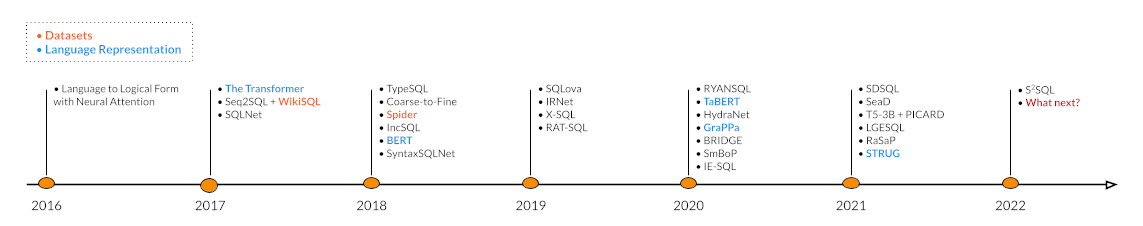

An essential step for text-to-SQL systems is creating and processing numerical representations of their NL inputs. Until recently, the most popular technique for NL representation has been pre-trained word embeddings. Recent advances in NLP, such as the introduction of the Transformer architecture followed by its use to create large Pre-trained Language Models (PLMs), has tipped the scales greatly to its favour. Additionally, as new PLMs are emerging, a new research path is being paved focusing on the design of better PLMs or PLMs created specifically for certain problems (such as the text-to-SQL problem).

- Word Embeddings: GloVe, Word2Vec, etc.

- Encoder-only Pre-trained Language Models: BERT, TaBERT, GraPPa

- Encoder-Decoder Pre-trained Language Models: BART, T5

Input Encoding

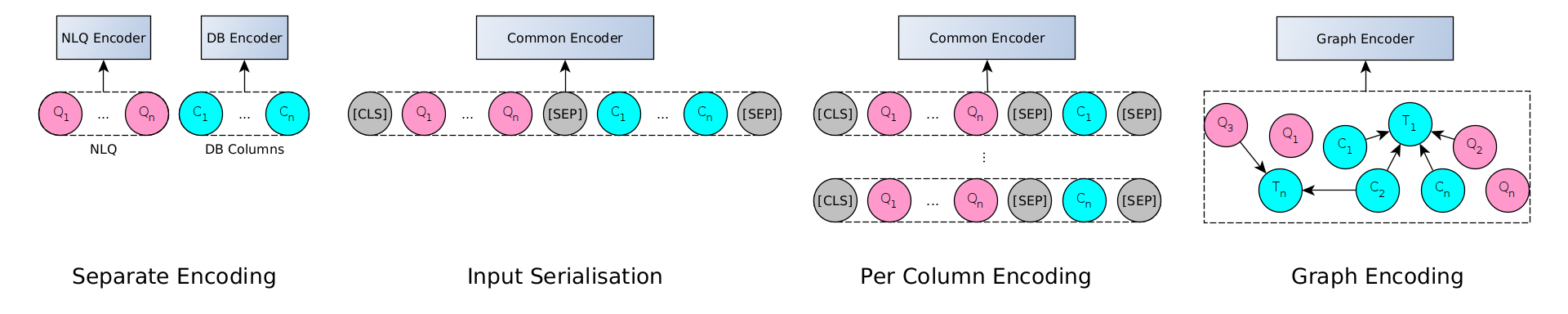

The dimension of input encoding examines how the input is structured and fed to the neural encoder of the system, so that it can be processed effectively. There are different inputs that are useful for translating a NLQ to SQL. The NLQ and the names of the DB columns and tables could be considered the minimum required input. Other features that could improve the network performance include: (a) the relationships present in the DB schema, including primary-to-foreign key relationships and relationships between columns and tables, and (b) links and additional values that have been discovered during the schema linking process.

The use of neural networks mandates the transformation of all inputs into a form that can be accepted by the network. This can be very restrictive, given how heterogeneous these types of inputs are and how difficult it is to represent them all in a single type of input.

- Separate Encoding: Use two different encoders for the NLQ and DB parts

- Serialised Encoding: Serialise all inputs into a common sequence of text

- Per-column Encoding: Encode each column separately

- Graph Encoding: Encode inputs as a graph

Output Decoding

Text-to-SQL systems following the encoder-decoder architecture can be divided into three categories based on how their decoder generates the output:

- Sequence-based Decoding: A sequence decoder creates the SQL query by generating SQL tokens one by one. This approach is prone to errors, as there is no barrier to prevent the decoder from generating syntactically and grammatically incorrect queries

- Sketch-based Decoding: A query sketch with fillable slots is pre-defined and the network must fill the slots by choosing the most likely candidate. This approach has a very limited ability to generate complex queries because it is not easy to design such a complex sketch

- Grammar-based Decoding: A sequence decoder generates grammar rules that can be applied sequentially to generate a SQL query. This approach greatly reduces the possibility of errors while enabling the generation of complex queries

Neural Training

Another dimension that must be examined when considering a neural text-to-SQL system is the methodology that is followed to train it. Even though the description of a system is usually focused around its architecture and neural layers as well as the way it encodes the inputs and decodes the output, the dimension of neural training is important, because it is the process that enables the neural network to learn how to perform the task at hand.

- Fresh Start: Train the network from scratch

- Transfer Learning: Use a part of larger network that has been pre-trained on a more generic task (e.g., PLMs)

- Additional Objectives: Train the network on similar tasks, in parallel to the text-to-SQL task

- Pre-training Specific Components: Pre-train only a few parts of the network before training the whole network

Output Refinement

Once trained, a neural model can be used for inference. There is one last dimension to consider; that of output refinement, i.e., additional techniques that can be applied on a trained model to produce even better results, or to avoid producing incorrect SQL queries.

- None

- Execution-guided Decoding: Execute partially-complete SQL queries during prediction time, and avoid predictions that create queries that cannot be executed

- Constrained Decoding: Use a pre-defined set of rules to detect predictions that can lead to erroneous queries

- Discriminative Re-ranking: Allow the system to generate more than one predictions for each query and use a different network to choose the best among them