QR2T

Verbalizing query results into natural language explanations

Try it out! QR2T is now live; feel free to test it!

Motivation

Database democratization aims to make databases accessible to non-expert users who are not familiar with database query languages. In this direction, considerable effort has already gone into two problems: Text-to-SQL, which translates a natural language query into SQL, and SQL-to-Text, the inverse problem. However, explaining query results in natural language has received far less attention.

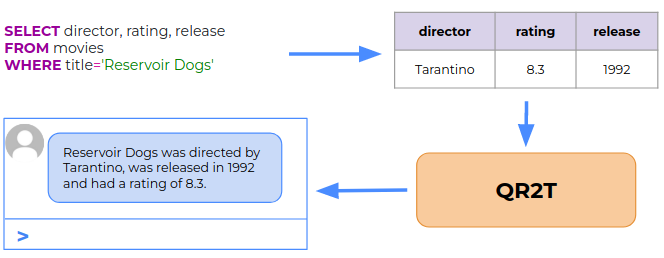

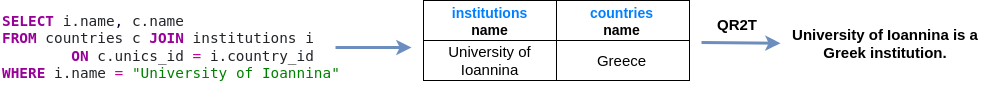

QR2T takes a query result as input and transforms it into natural language.

Architecture

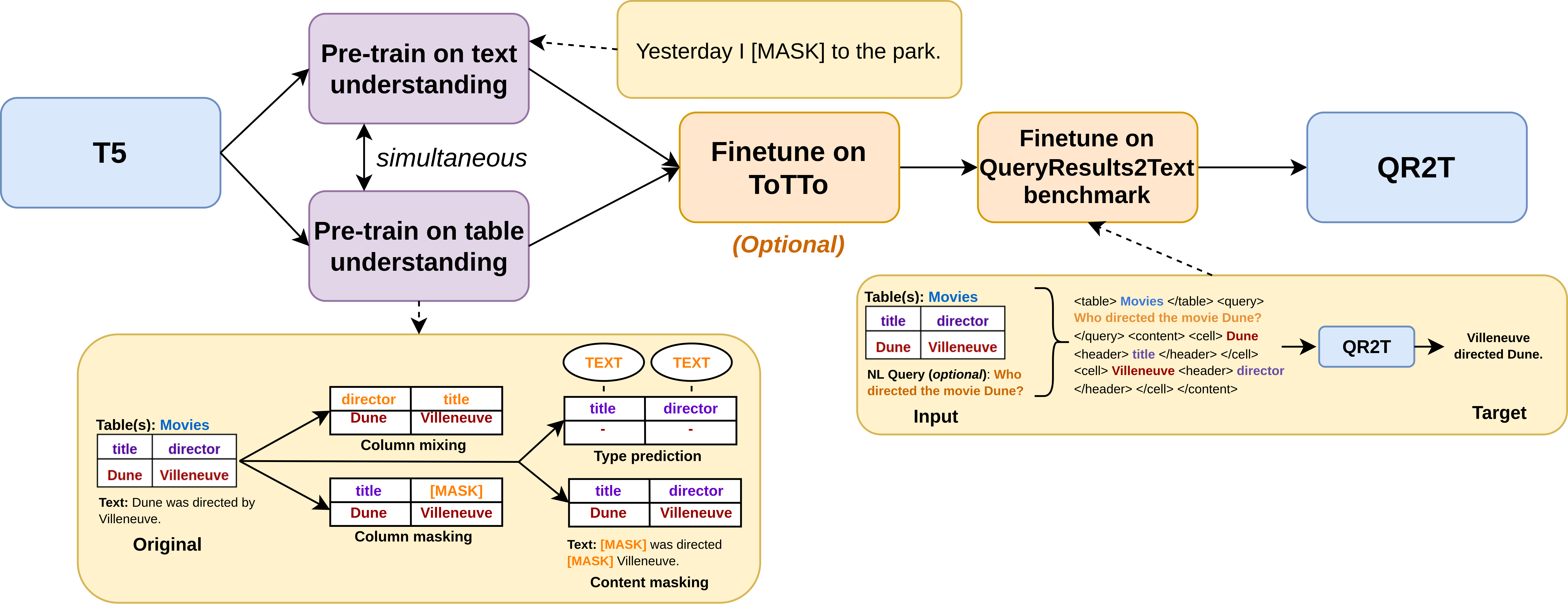

The pipeline has two main components. First, a preprocessing step transforms the query so that its results include additional features that lead to a more informative verbalisation. Second, we propose pre-training T5 on a synthetic table dataset, encouraging the model to understand table structure, for example, the correlation between a column name and its value. In addition, we create the QR2T benchmark, the first dataset that contains query result verbalisations.

We build on the knowledge and expressiveness of T5, which has proven its value in tasks such as question answering, text summarization, and story generation. However, our task differs: it is formulated not as a Text-to-Text task but as a Table-to-Text task. Our work therefore focuses on injecting information into the table so that it is closer to the text format T5 expects (preprocessing), and on teaching T5 what a table structure is (pretraining).
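Because T5 consumes a flat token sequence, a table has to be serialized before it reaches the model. The sketch below shows one common way to do this, writing each cell next to its column name so the model can see which header a value belongs to. The `linearize_table` function, the `<row i>` and `<title>` markers, and the `column: value` separator are illustrative assumptions, not QR2T's actual input format.

```python
from typing import Optional, Sequence


def linearize_table(
    columns: Sequence[str],
    rows: Sequence[Sequence[object]],
    title: Optional[str] = None,
) -> str:
    """Flatten a query-result table into one string for a text-to-text model.

    Hypothetical format: each cell is written as "column: value", cells are
    joined with " | ", and every row is prefixed with a "<row i>" marker.
    """
    parts = []
    if title:
        parts.append(f"<title> {title}")
    for i, row in enumerate(rows, start=1):
        # Guard against ragged rows, which would silently misalign headers.
        if len(row) != len(columns):
            raise ValueError(f"row {i} has {len(row)} cells, expected {len(columns)}")
        cells = " | ".join(f"{col}: {val}" for col, val in zip(columns, row))
        parts.append(f"<row {i}> {cells}")
    return " ".join(parts)
```

For a one-row result with columns `name` and `country` and the hypothetical values `University A` and `Greece`, this yields `<row 1> name: University A | country: Greece`. Pairing every value with its header trades input length for making the column-value correspondence explicit.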


Preprocessing

While the results of an SQL query are usually returned as a table, this table often lacks information that the model needs. Since the verbalisation is performed by T5, a model pre-trained for natural language generation, the column names and contents should resemble the text distribution that T5 observed during pre-training.
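One way to move column names toward the text distribution T5 has seen is to rewrite schema-style identifiers into plain words. The following is a minimal sketch, assuming snake_case or camelCase column names and a small hand-written abbreviation map; `humanize_column` and `ABBREVIATIONS` are hypothetical and not part of QR2T.

```python
import re

# Hypothetical abbreviation map; a real system might derive it from the schema.
ABBREVIATIONS = {"inst": "institution", "num": "number", "avg": "average", "proj": "project"}


def humanize_column(name: str) -> str:
    """Turn a schema identifier such as 'inst_name' into words such as 'institution name'."""
    # Insert a space at lowercase/digit-to-uppercase boundaries (camelCase -> camel Case).
    spaced = re.sub(r"(?<=[a-z0-9])(?=[A-Z])", " ", name)
    # Split on underscores and whitespace, dropping empty tokens.
    tokens = [t for t in re.split(r"[_\s]+", spaced.strip()) if t]
    # Lowercase each token and expand known abbreviations.
    return " ".join(ABBREVIATIONS.get(t.lower(), t.lower()) for t in tokens)
```

With this map, `humanize_column("inst_name")` returns `institution name` and `humanize_column("startDate")` returns `start date`.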

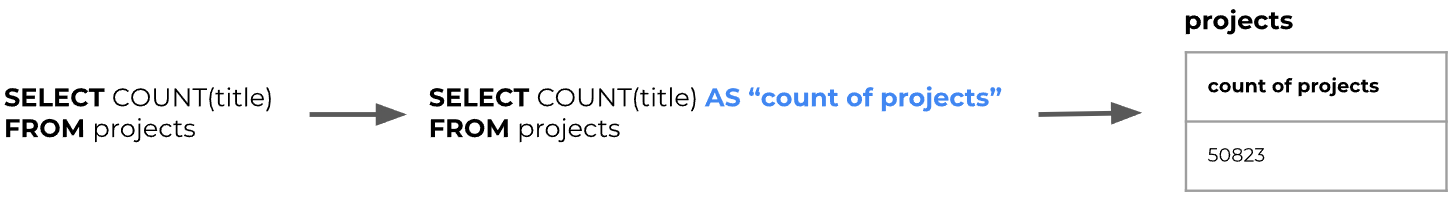

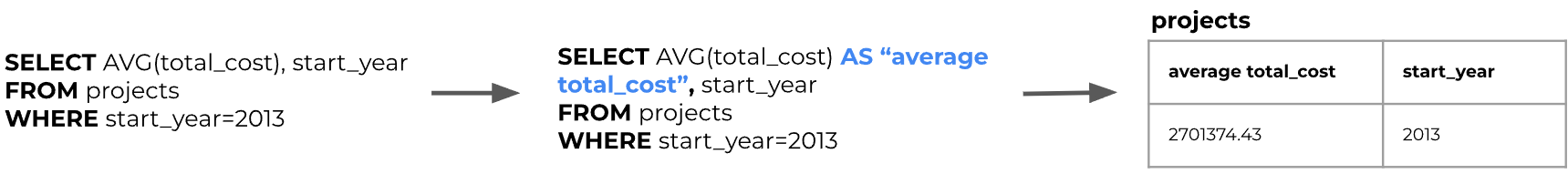

We define a set of rules that inject extra information into the query result table. Below are examples of the rules for aggregate queries.


Preprocessing rule for the COUNT keyword.

Preprocessing rule for the AVG keyword.
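The COUNT and AVG rules can be pictured as mapping an aggregate expression to a descriptive column header. The sketch below assumes the aggregate has already been parsed out of the SELECT clause into a small record; `AggregateColumn`, `describe_aggregate`, and the exact header wording are hypothetical and only illustrate the idea, not the rules QR2T uses.

```python
from dataclasses import dataclass


@dataclass
class AggregateColumn:
    function: str  # aggregate name as parsed from SELECT, e.g. "COUNT" or "AVG"
    argument: str  # column inside the parentheses, or "*"
    table: str     # table the aggregate ranges over


def describe_aggregate(agg: AggregateColumn) -> str:
    """Return a natural-language header for an aggregate result column."""
    func = agg.function.upper()
    target = agg.table.replace("_", " ")
    arg = agg.argument.replace("_", " ")
    if func == "COUNT":
        if agg.argument == "*":
            return f"number of {target}"
        # COUNT(col) only counts rows where col is not NULL.
        return f"number of {target} with a known {arg}"
    if func == "AVG":
        return f"average {arg} of {target}"
    raise ValueError(f"no rule for aggregate {agg.function!r}")
```

Under these assumptions, a `COUNT(*)` header over a hypothetical `institutions` table becomes `number of institutions`, and `AVG(total_cost)` over `projects` becomes `average total cost of projects`.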

Additional rules handle JOIN, GROUP BY, and WHERE clauses in any combination.
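As an illustration of a WHERE-clause rule, consider a hypothetical query such as `SELECT name FROM institutions WHERE country = 'Greece'`: it returns only names, so the country never reaches the model. A minimal sketch, assuming the equality predicates have already been extracted from the WHERE clause as `(column, value)` pairs, appends each constant as an extra column. `inject_where_constants` and the table and column names are assumptions made for the example.

```python
from typing import List, Sequence, Tuple


def inject_where_constants(
    columns: Sequence[str],
    rows: Sequence[Sequence[object]],
    equalities: Sequence[Tuple[str, object]],
) -> Tuple[List[str], List[List[object]]]:
    """Append one constant column per `column = value` predicate missing from the result."""
    new_columns = list(columns)
    extra_values = []
    for col, value in equalities:
        # Skip predicates whose column is already visible in the result.
        if col not in new_columns:
            new_columns.append(col)
            extra_values.append(value)
    # Every row satisfied the WHERE clause, so every row gets the same constants.
    new_rows = [list(row) + extra_values for row in rows]
    return new_columns, new_rows
```

Because every returned row satisfies the predicate, the injected value is the same for all rows; the gain is that the verbaliser can now mention the filtering condition explicitly.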

Pretraining

As mentioned before, T5 expects text as its input, while query results are in table format. One option is to fine-tune only on table-to-text datasets such as ToTTo or our QR2T benchmark. However, these datasets may not be enough for the model to understand tables, and it might end up simply copying parts of the input. We therefore propose a set of pretraining tasks that teach the model what a table is, such as the correlation between a column name and its value. In parallel, we also pretrain the model on the original objective of predicting masked text so that it does not forget how to generate natural language.
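The table-aware pretraining tasks are not spelled out here, so the following is only a sketch of what such tasks could look like: one task masks a cell value and asks the model to recover it from its column name, another masks a column name and asks for it from the value. Masked spans use T5's sentinel tokens (`<extra_id_0>`, `<extra_id_1>`) in the style of its span-corruption objective; the function name, the single-row rendering, and the `column: value` format are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

# T5 marks masked spans with sentinel tokens; the target closes with the next sentinel.
MASK = "<extra_id_0>"
END = "<extra_id_1>"


def make_table_pretraining_pairs(
    columns: Sequence[str],
    rows: Sequence[Sequence[object]],
    rng: random.Random,
) -> List[Tuple[str, str]]:
    """Return two (input, target) pairs built from one randomly chosen cell:
    a value-prediction task and a column-name-prediction task."""
    r = rng.randrange(len(rows))
    c = rng.randrange(len(columns))
    row = rows[r]

    def render(mask_header: bool) -> str:
        # Serialize the chosen row as "column: value" cells, masking either
        # the header or the value of the chosen cell.
        cells = []
        for j, (col, val) in enumerate(zip(columns, row)):
            if j != c:
                cells.append(f"{col}: {val}")
            elif mask_header:
                cells.append(f"{MASK}: {val}")
            else:
                cells.append(f"{col}: {MASK}")
        return " | ".join(cells)

    value_pair = (render(mask_header=False), f"{MASK} {row[c]} {END}")
    header_pair = (render(mask_header=True), f"{MASK} {columns[c]} {END}")
    return [value_pair, header_pair]
```

Passing an explicit `random.Random` keeps example generation reproducible. Mixing these pairs with ordinary masked-text examples would correspond to the parallel language-modelling pretraining mentioned in the paragraph above.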

The pipeline aims to help T5 adapt better to the QR2T task.

Examples

Below are some example queries, their results in table format, and the verbalisations produced by our model.

Question: Which institutions are in Greece?

Question: When did the FlexiSTAT project start and when did it end?